Synchronous and asynchronous execution are closely related to parallelism and concurrency, two important computing concepts that describe how multiple tasks are handled. All four deal with managing tasks, but each focuses on a different aspect of task execution. Let’s break down how synchronous, asynchronous, parallel, and concurrent execution relate to one another.


Introduction

- Synchronous: One task at a time, blocking.

- Asynchronous: Tasks run without waiting for each other, non-blocking.

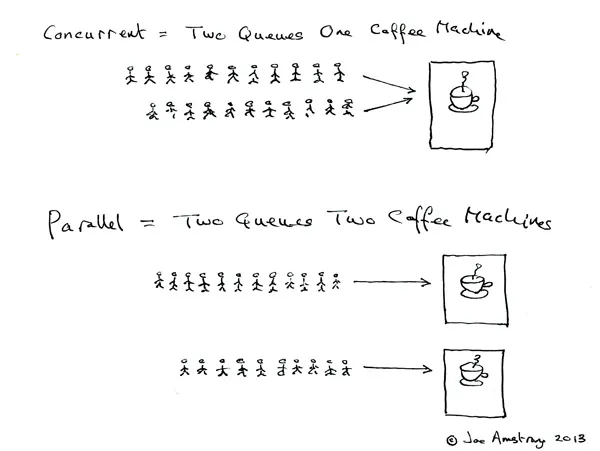

- Concurrency: Multiple tasks overlap but don’t necessarily run simultaneously.

- Parallelism: Tasks run truly simultaneously on multiple CPU cores.

Handling Waiting:

- Synchronous: Blocks execution until the current task is complete, which can lead to inefficiencies during wait times.

- Asynchronous: Allows execution to continue without waiting, freeing up resources for other tasks.

Execution:

- Parallel: Runs multiple tasks simultaneously across different processors or cores, maximizing resource use.

- Concurrency: Manages multiple tasks by interleaving them, creating the appearance of simultaneous execution even on a single processor.


Synchronous and Asynchronous

How tasks handle waiting

Synchronous

- Blocking: In synchronous execution, when a task is running, the system must wait for it to finish before moving on to the next one. This is called blocking behaviour.

- Example: Consider reading a file. If you use a synchronous method, the program halts at that point until the entire file has been read. During this time, no other code in that thread can run, so the program sits idle while it waits.

- Use Cases: Synchronous execution is easy to understand and reason about, which makes it suitable for tasks where order and timing are critical, such as sequential operations where each step depends on the previous one (like a chain of mathematical calculations).
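To make the blocking behaviour concrete, here is a minimal sketch that assumes time.sleep() as a stand-in for a slow disk read; the function read_file_slowly is hypothetical. Each call blocks until it returns, so the second read cannot start until the first finishes and the total time is the sum of both waits.

```python
import time

def read_file_slowly(name, seconds):
    """Hypothetical stand-in for a slow, blocking file read."""
    time.sleep(seconds)  # the calling thread is blocked here
    return f"contents of {name}"

start = time.perf_counter()
first = read_file_slowly("a.txt", 0.2)   # runs to completion first
second = read_file_slowly("b.txt", 0.1)  # starts only after the first returns
elapsed = time.perf_counter() - start

print(first, "|", second)
print(f"elapsed: {elapsed:.2f}s")  # roughly 0.3s, because the waits add up
```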

Asynchronous

- Non-Blocking: In asynchronous execution, tasks can start and continue without waiting for previous tasks to complete. This is known as non-blocking behaviour.

- Example: Imagine you are sending a request to a server to fetch data. With an asynchronous method, you can send the request and then continue executing other code (like updating the user interface) while waiting for the server’s response. Once the response is ready, a callback or promise will handle it.

- Use Cases: Asynchronous execution is ideal for I/O-bound operations, like web requests or file operations, where waiting for a response is expected, allowing other operations to proceed.
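The request example above can be sketched with Python’s asyncio, assuming asyncio.sleep() stands in for network latency and a simple counter stands in for UI updates (fetch_data and on_response are hypothetical names). The request is started with asyncio.create_task(), the program keeps working while it is pending, and a callback registered with add_done_callback() handles the response once it arrives.

```python
import asyncio

responses = []  # filled in by the callback below

async def fetch_data():
    """Hypothetical server request; asyncio.sleep() stands in for network latency."""
    await asyncio.sleep(0.2)
    return {"status": "ok"}

def on_response(task):
    # Called by the event loop once the request task has finished
    responses.append(task.result())
    print("Response received:", task.result())

async def main():
    request = asyncio.create_task(fetch_data())  # send the request, don't wait yet
    request.add_done_callback(on_response)

    ui_updates = 0
    while not request.done():
        ui_updates += 1            # stand-in for refreshing the user interface
        await asyncio.sleep(0.05)  # hand control back to the event loop
    return request.result(), ui_updates

result, ui_updates = asyncio.run(main())
print(result, "after", ui_updates, "UI updates")
```

In real code you would usually just await the task instead of polling done(); the loop is there only to show other work continuing while the request is in flight.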

Parallelism and Concurrency:

How tasks are executed.

Parallel Execution:

- Simultaneous Execution: In parallel execution, multiple tasks are executed at the same time, often on separate processing units (like multiple CPU cores). This lets tasks truly run at the same moment, maximizing resource utilization.

- Example: In a data processing application, you might split a large dataset into chunks and process each chunk on a different core. If you have four CPU cores, you can process four chunks simultaneously, reducing the total processing time.

- Use Cases: Parallel execution is beneficial for CPU-bound tasks that can be divided into smaller, independent subtasks (e.g., image processing and numerical simulations).
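The chunking idea above can be sketched with Python’s concurrent.futures.ProcessPoolExecutor. This is an illustration under assumptions: a sum of squares stands in for real data processing, and the helpers process_chunk and split_into_chunks are hypothetical. Each chunk goes to a separate worker process, which the OS can schedule on a different core, and the partial results are combined at the end.

```python
from concurrent.futures import ProcessPoolExecutor

def process_chunk(chunk):
    """CPU-bound work on one chunk: here, a sum of squares (illustrative)."""
    return sum(x * x for x in chunk)

def split_into_chunks(data, n_chunks):
    # Hypothetical helper: divide data into n_chunks roughly equal slices
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]

if __name__ == "__main__":
    data = list(range(1_000_000))
    chunks = split_into_chunks(data, 4)

    # Four worker processes, one per chunk; the OS can place them on different cores
    with ProcessPoolExecutor(max_workers=4) as pool:
        partial_sums = list(pool.map(process_chunk, chunks))

    total = sum(partial_sums)
    print(len(chunks), "chunks, total =", total)
```

The `if __name__ == "__main__":` guard matters because some platforms start worker processes by re-importing the main module.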

Concurrency:

- Interleaved Execution: Concurrency, on the other hand, refers to managing multiple tasks that may not run at the same time but appear to progress simultaneously. This can be achieved by rapidly switching between tasks (time-slicing).

- Example: On a single-core CPU, a web server can still handle multiple incoming requests. It may process one request, pause it while it waits for a database response, and start processing another request. This creates the illusion that multiple requests are being handled at once.

- Use Cases: Concurrency is useful for handling multiple I/O-bound tasks where waiting (e.g., for user input or network responses) is common, allowing the system to remain responsive even with a single processor.
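Interleaving can be illustrated on a single thread with a tiny round-robin scheduler built from Python generators. This is a sketch of cooperative switching, where each task decides when to pause, rather than the preemptive time-slicing an operating system performs; handle_request and run_round_robin are hypothetical names. Each yield marks a point where a task pauses (for example, while waiting on the database), and the scheduler moves on to the next task.

```python
from collections import deque

def handle_request(name, steps):
    """A 'task' as a generator: each yield is a point where it pauses (e.g. waiting on I/O)."""
    for i in range(steps):
        yield f"{name} step {i}"

def run_round_robin(tasks):
    """Run tasks on one thread, switching to the next task at every pause."""
    queue = deque(tasks)
    log = []
    while queue:
        task = queue.popleft()
        try:
            log.append(next(task))  # run the task until its next pause
            queue.append(task)      # not finished: put it back in line
        except StopIteration:
            pass                    # finished: drop it
    return log

log = run_round_robin([handle_request("req1", 3), handle_request("req2", 2)])
for entry in log:
    print(entry)
```

The printed log alternates between req1 and req2 even though only one of them is running at any moment, which is exactly the "appear to progress simultaneously" effect described above.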

Synchronous + Concurrency

At first glance, synchronous execution and concurrency seem incompatible: on a single-core system, if the processor executes task 1 synchronously, it cannot pick up another task until task 1 completes.

How, then, could older single-core systems run multiple applications at the same time?

The answer is multithreading and context switching, which together let synchronous tasks run concurrently:

- The operating system uses a technique called time slicing, where it rapidly switches between different threads or processes. Although only one thread executes at a time, the switching happens quickly enough to give the illusion of simultaneous execution.

- When the operating system switches from one thread to another, it saves the current thread’s state and loads the next one’s state. This process is called context switching and allows the system to maintain the progress of multiple tasks.

Example:

- A single-core computer runs a simple application that manages two synchronous tasks, T1 and T2. The operating system (OS) creates one thread for each task.

- Although each task is synchronous and executes its own steps in order, the OS uses context switching to alternate between T1 and T2, giving the illusion of parallel execution.

- While this approach enhances responsiveness, it also introduces some overhead due to the time spent saving and restoring thread states.

Example

Multithreading on a single-core CPU.

- Scenario: Imagine a system where you have multiple tasks that appear to run at the same time but are actually taking turns. For example, a program reads a large file line by line while also performing other background operations like logging data or updating a progress bar.

- How It Works: The CPU switches between tasks so quickly that it appears they are running concurrently, but only one task is actually running at any given time.

```python
import threading
import time

def task1():
    print("Task 1 is starting...")
    time.sleep(2)  # Simulating work
    print("Task 1 is complete.")

def task2():
    print("Task 2 is starting...")
    time.sleep(1)  # Simulating work
    print("Task 2 is complete.")

# Creating threads
thread1 = threading.Thread(target=task1)
thread2 = threading.Thread(target=task2)

thread1.start()  # Start task1
thread2.start()  # Start task2

thread1.join()  # Wait for task1 to finish
thread2.join()  # Wait for task2 to finish

print("All tasks are complete.")
```

Output

```text
Task 1 is starting...
Task 2 is starting...
Task 2 is complete.
Task 1 is complete.
All tasks are complete.
```

The threads take turns on the CPU, but each task is still synchronous: it blocks its own thread until its current operation (here, time.sleep()) completes.

Asynchronous + Concurrency

- Asynchronous execution, by design, promotes concurrency. Asynchronous tasks can start, pause, or wait for an operation (like an I/O request) to complete while other tasks are being processed. This makes it possible to handle multiple operations concurrently, improving responsiveness and efficiency in handling I/O-bound or non-blocking tasks.

- Concurrency in Asynchronous Systems: Asynchronous programming allows tasks to be executed concurrently, even on a single-core machine, by interleaving tasks without blocking. For example, with JavaScript’s async/await or Python’s asyncio, multiple I/O operations can be handled concurrently.

Example:

Asynchronous I/O-bound operations (e.g., handling multiple web requests).

- Scenario: A web server handles multiple client requests concurrently. While one request waits for a database response, other requests are processed, without waiting for one task to finish before moving to the next.

- How It Works: Non-blocking I/O allows tasks to overlap in time, but only one task actually executes at any given moment (the event loop runs on a single thread, and tasks still compete for shared resources).

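```python
import asyncio

async def task_a():
    await asyncio.sleep(2)  # Simulated I/O wait; control returns to the event loop
    print("Task A finished")

async def task_b():
    await asyncio.sleep(1)
    print("Task B finished")

async def main():
    # Run both coroutines concurrently on the same event loop
    await asyncio.gather(task_a(), task_b())

asyncio.run(main())
```

Both task_a() and task_b() run concurrently without blocking each other. While one is suspended in await asyncio.sleep(), the event loop is free to run the other, so "Task B finished" is printed first.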
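To relate this back to the web-server scenario, here is a sketch that assumes asyncio.sleep(0.2) stands in for a database query and uses a hypothetical handle_request coroutine. Five requests are handled concurrently, so the whole batch takes roughly as long as one query rather than five queries back to back.

```python
import asyncio
import time

async def handle_request(request_id):
    """Hypothetical request handler; asyncio.sleep() stands in for a database query."""
    print(f"request {request_id}: querying database")
    await asyncio.sleep(0.2)  # suspended here while the loop serves other requests
    print(f"request {request_id}: done")
    return request_id

async def main():
    start = time.perf_counter()
    results = await asyncio.gather(*(handle_request(i) for i in range(5)))
    return results, time.perf_counter() - start

results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")  # roughly 0.2s in total, not 5 x 0.2s
```

asyncio.gather() returns results in the order the coroutines were passed in, regardless of which one finished first.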


Synchronous + Parallelism

- Parallelism in a synchronous system is possible, but each task still follows the blocking behaviour of synchronous execution. In parallel systems (using multiple threads or processes), even synchronous tasks can run simultaneously when they are scheduled on different processors.

- Parallelism in Synchronous Systems: You could have multiple tasks executing in parallel, with each task blocking within its own thread or process. For example, two processes might each run a CPU-intensive synchronous task at the same time, while each task still blocks within its own process. Threads can do the same in many languages, but in standard CPython builds the global interpreter lock prevents threads from running Python code in parallel, so processes are the usual choice for CPU-bound work.

Example:

Multi-core processing of independent synchronous tasks.

- Scenario: Imagine a system where two computationally intensive tasks (like image processing) are executed on different CPU cores. Each task is processed synchronously (in a step-by-step fashion) but on separate cores, allowing them to run in parallel.

- How It Works: Each task runs on a different processor core, executing in parallel, but within each core, the tasks themselves are synchronous (blocking).

```python
from multiprocessing import Process
import time

def task_a():
    print("Task A started")
    time.sleep(3)
    print("Task A finished")

def task_b():
    print("Task B started")
    time.sleep(2)
    print("Task B finished")

# The __main__ guard is required on platforms that start child
# processes by re-importing this module (e.g. Windows, macOS)
if __name__ == "__main__":
    # Run each task in its own process; the OS can place them on different cores
    process_a = Process(target=task_a)
    process_b = Process(target=task_b)

    process_a.start()
    process_b.start()

    process_a.join()
    process_b.join()
```

Each task runs in its own process, so the two can run in parallel on different cores, but task_a() and task_b() themselves are synchronous: each blocks its own process until its steps complete.

Asynchronous + Parallelism

- Asynchronous systems combine well with parallelism, especially on multi-core or distributed architectures. An asynchronous program can hand work off to multiple threads, processes, or machines, which then run truly in parallel while the program keeps coordinating them without blocking.

- Parallelism in Asynchronous Systems: Asynchronous tasks (e.g., web requests or file reading) can be run in parallel, where tasks don’t block each other. When combined with parallel processing (multi-core CPUs or distributed systems), this can result in highly efficient performance for large-scale applications.

Example

Asynchronous tasks running on multiple cores or distributed systems.

- Scenario: A data processing pipeline where multiple data chunks are processed in parallel on different machines, and each task runs asynchronously (e.g., fetching data, processing it, and sending results back).

- How It Works: Multiple asynchronous tasks are executed in parallel across multiple processors or machines. Each task performs non-blocking operations, such as sending data over a network, while other tasks run in parallel.

```python
import asyncio
from concurrent.futures import ProcessPoolExecutor

def cpu_bound_task(task_num):
    print(f"Task {task_num} started")
    # Simulating a heavy computation
    sum([i * i for i in range(10**6)])
    print(f"Task {task_num} finished")

async def run_in_parallel():
    loop = asyncio.get_running_loop()
    with ProcessPoolExecutor() as pool:
        # Each call runs in a worker process; gather awaits both without blocking the loop
        await asyncio.gather(
            loop.run_in_executor(pool, cpu_bound_task, 1),
            loop.run_in_executor(pool, cpu_bound_task, 2),
        )

# Running tasks asynchronously in parallel
if __name__ == "__main__":
    asyncio.run(run_in_parallel())
```

Each cpu_bound_task() call runs in a separate worker process, so the two computations can run in parallel on different cores while the event loop awaits them without blocking. This is useful for high-performance workloads that mix heavy computation with asynchronous code.
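The pipeline scenario (fetch, process, send back) can be sketched on a single machine, with worker processes standing in for separate machines. Under these assumptions, fetch_chunk, transform, send_result, and pipeline are hypothetical names, asyncio.sleep() simulates network I/O, and a sum of squares simulates the processing step. The I/O steps stay non-blocking on the event loop, while the CPU-bound step is offloaded to a ProcessPoolExecutor so different chunks can be processed in parallel.

```python
import asyncio
from concurrent.futures import ProcessPoolExecutor

def transform(chunk):
    """CPU-bound step, run in a worker process (illustrative computation)."""
    return sum(x * x for x in chunk)

async def fetch_chunk(chunk_id):
    """Hypothetical network fetch; asyncio.sleep() stands in for I/O latency."""
    await asyncio.sleep(0.1)
    return list(range(chunk_id * 1000, (chunk_id + 1) * 1000))

async def send_result(chunk_id, value):
    """Hypothetical upload of a result, also simulated with asyncio.sleep()."""
    await asyncio.sleep(0.1)
    return chunk_id, value

async def pipeline(chunk_id, pool):
    loop = asyncio.get_running_loop()
    data = await fetch_chunk(chunk_id)                         # non-blocking I/O
    value = await loop.run_in_executor(pool, transform, data)  # parallel CPU work
    return await send_result(chunk_id, value)                  # non-blocking I/O

async def main():
    with ProcessPoolExecutor() as pool:
        return await asyncio.gather(*(pipeline(i, pool) for i in range(4)))

if __name__ == "__main__":
    results = asyncio.run(main())
    print(results)
```

Keeping I/O on the event loop and sending only the computation to worker processes avoids paying process overhead for work that is mostly waiting.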
