In today’s digital world, managing the flow of data and requests between clients and servers is critical for maintaining the performance, security, and availability of web services. One key technique used to achieve this is rate limiting. This blog will delve into the concept of rate limiting, its importance, methods, and best practices.

What is Rate Limiting?

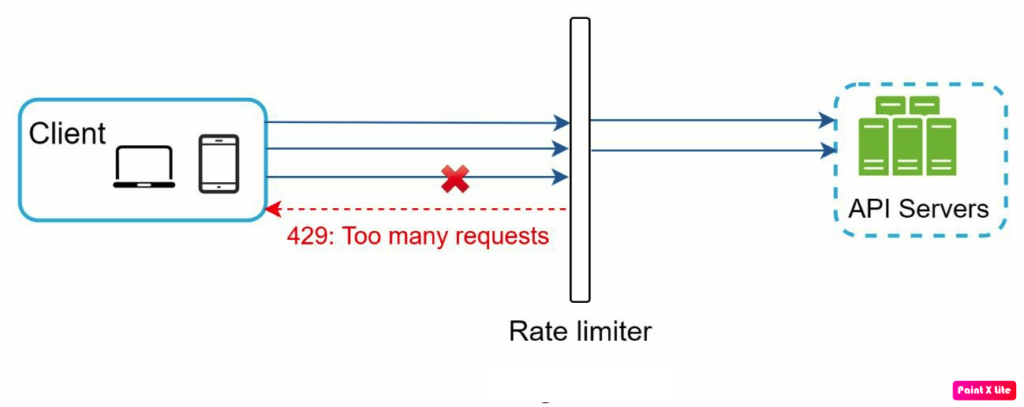

Rate limiting is a technique used to control the amount of incoming and outgoing traffic to or from a network. Essentially, it restricts the number of requests a user can make to a server within a specific time frame. This is crucial for preventing abuse, ensuring fair usage, and maintaining the stability and performance of the server.

Image source: System Design Interview: An Insider’s Guide by Alex Xu

Advantages of Rate Limiting

- Prevents Abuse and DDoS Attacks: Rate limiting helps mitigate denial-of-service (DoS) and distributed denial-of-service (DDoS) attacks by capping the number of requests each client can make. This makes it much harder for malicious users to overwhelm your server with excessive requests, keeping your services available to legitimate users.

- Ensures Fair Usage: By controlling the request rate, rate limiting ensures that no single user can monopolize server resources. This fairness is particularly important in multi-tenant environments, where resources are shared among many users.

- Improves System Performance and Stability: Rate limiting can prevent server overload, which can lead to crashes or degraded performance. By capping the number of requests, you can ensure that your system remains responsive and stable, providing a better user experience.

- Cost Management: In cloud-based environments, resources such as bandwidth, CPU, and memory are often billed based on usage. Rate limiting helps control these costs by preventing excessive use, thus avoiding unexpected expenses.

- Enhances Security: Rate limiting can protect against brute force attacks on login pages or APIs by restricting the number of attempts a user can make. This security measure reduces the risk of unauthorized access.

- Data Integrity: By controlling the rate of write operations, rate limiting can help protect data integrity, especially in databases. It reduces the risk that bursts of rapid, uncontrolled writes lead to data corruption or inconsistent states.

How Does Rate Limiting Work?

Rate limiting can be implemented in various ways, depending on the needs and infrastructure of the system. Here are some common methods:

- Token bucket

- Leaky bucket

- Fixed window counter

- Sliding window log

- Sliding window counter

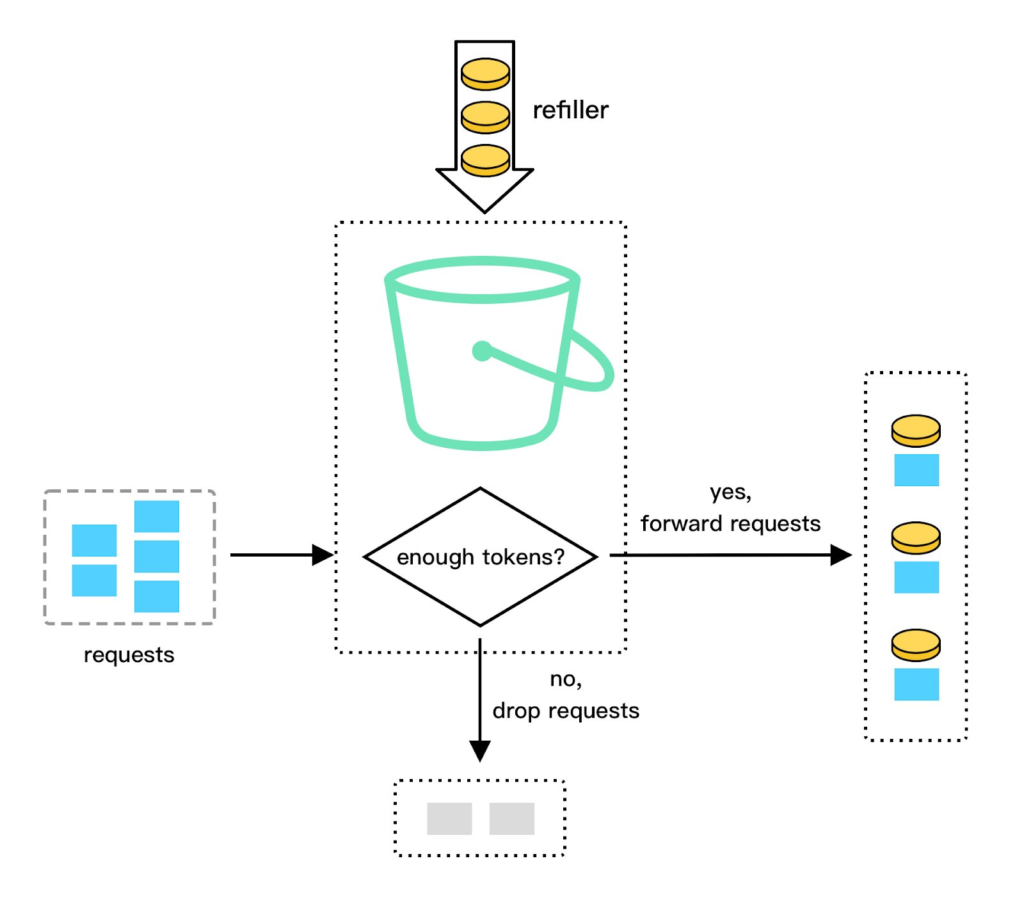

Token bucket algorithm

- A bucket holds tokens, each representing the right to make a request.

- Tokens are added to the bucket at a fixed rate, up to the bucket's capacity; once the bucket is full, extra tokens are discarded.

- Each incoming request consumes a token.

- If the bucket is empty, the request is denied until more tokens are added.
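The steps above can be sketched in Python for a single client. The class name `TokenBucket`, its `capacity` and `rate` parameters, and the injectable `clock` (used here only so the demo does not depend on wall-clock time) are illustrative assumptions, not the API of any particular library.

```python
import time


class TokenBucket:
    """Token bucket for one client: refills at `rate` tokens/second, holds at most `capacity`."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity  # maximum tokens the bucket can hold (burst size)
        self.rate = rate          # tokens added per second
        self.clock = clock        # hypothetical injectable clock, handy for testing
        self.tokens = capacity    # start with a full bucket
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Add tokens for the time elapsed since the last call; overflow beyond capacity is discarded.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1      # each request consumes one token
            return True
        return False              # bucket empty: deny until tokens are refilled


now = [0.0]
bucket = TokenBucket(capacity=2, rate=1, clock=lambda: now[0])
print([bucket.allow() for _ in range(3)])  # [True, True, False]
now[0] = 1.0  # one second later, one token has been refilled
print(bucket.allow())  # True
```

With `capacity` above 1, a client can burst up to that many requests at once, while `rate` bounds the long-run average.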

Leaking bucket algorithm

The leaking bucket algorithm is similar to the token bucket except that requests are processed at a fixed rate. It is usually implemented with a first-in-first-out (FIFO) queue. The algorithm works as follows:

- When a request arrives, the system checks if the queue is full. If it is not full, the request is added to the queue.

- Otherwise, the request is dropped.

- Requests are pulled from the queue and processed at regular intervals.
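A minimal Python sketch of the leaking bucket, assuming a hypothetical `tick()` method that a timer or background worker would call at regular intervals. The bounded `deque` plays the role of the FIFO queue; the class and parameter names are illustrative.

```python
from collections import deque


class LeakyBucket:
    """Leaking bucket as a bounded FIFO queue drained at a fixed rate."""

    def __init__(self, capacity, leak_per_tick):
        self.capacity = capacity            # maximum number of queued requests
        self.leak_per_tick = leak_per_tick  # requests processed per tick
        self.queue = deque()

    def offer(self, request):
        """Add the request if the queue is not full; otherwise drop it."""
        if len(self.queue) < self.capacity:
            self.queue.append(request)
            return True
        return False

    def tick(self):
        """Called at regular intervals: pull up to leak_per_tick requests, oldest first."""
        processed = []
        for _ in range(min(self.leak_per_tick, len(self.queue))):
            processed.append(self.queue.popleft())
        return processed


bucket = LeakyBucket(capacity=3, leak_per_tick=2)
print([bucket.offer(f"req{i}") for i in range(4)])  # [True, True, True, False]
print(bucket.tick())  # ['req0', 'req1']
print(bucket.tick())  # ['req2']
```

Unlike the token bucket, the output rate here is fixed by `leak_per_tick`, so bursts are smoothed out rather than passed through.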

Fixed window counter algorithm

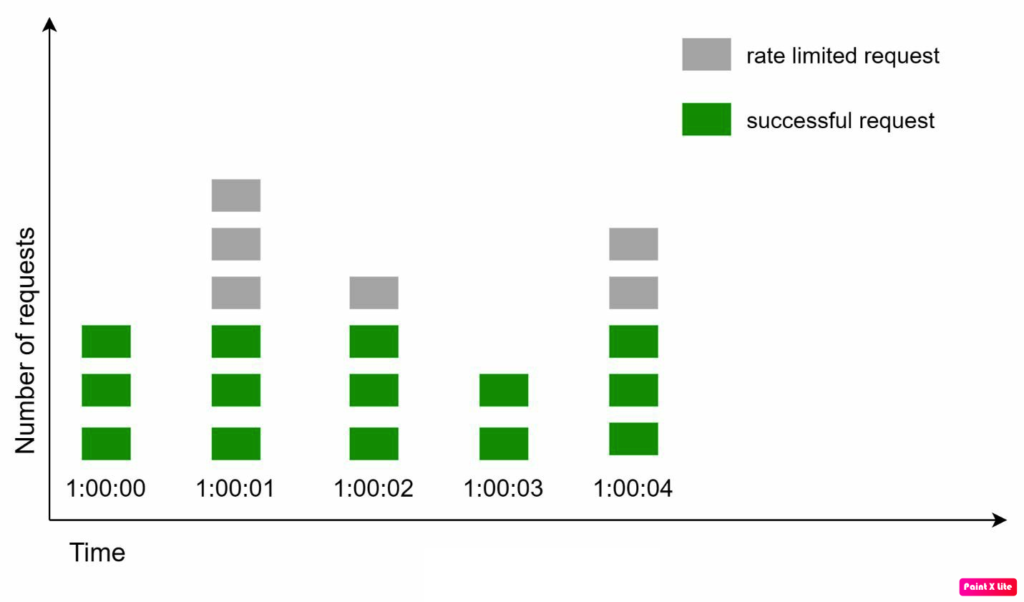

The fixed window counter algorithm works as follows:

- The algorithm divides the timeline into fixed-sized time windows and assigns a counter for each window.

- Each request increments the counter by one.

- Once the counter reaches the pre-defined threshold, new requests are dropped until a new time window starts.

- A major problem with this algorithm is that a burst of traffic at the edges of time windows can let more requests through than the allowed quota.

- For example, if the window size is 1 minute and the limit is 100 requests per minute, it is possible to send 100 requests at the very end of one window and another 100 requests at the very beginning of the next window, resulting in 200 requests in a very short time.
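Here is a sketch of a fixed window counter for a single client, with times in seconds passed in explicitly. `FixedWindowCounter` is a hypothetical name, and a real limiter would usually keep one counter per client key. The demo scales the edge-burst example down to a limit of 3 requests per 60-second window.

```python
class FixedWindowCounter:
    """Fixed window counter for one client; times are seconds supplied by the caller."""

    def __init__(self, limit, window):
        self.limit = limit          # maximum requests per window
        self.window = window        # window length in seconds
        self.current_window = None  # index of the window the counter belongs to
        self.count = 0

    def allow(self, now):
        window_id = int(now // self.window)  # which fixed window `now` falls into
        if window_id != self.current_window:
            # A new window has started: reset the counter.
            self.current_window = window_id
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False  # threshold reached: drop until the next window starts


limiter = FixedWindowCounter(limit=3, window=60)
print([limiter.allow(59) for _ in range(4)])  # [True, True, True, False]
print([limiter.allow(60) for _ in range(3)])  # [True, True, True]
```

Six requests are accepted within about one second (at t = 59 and t = 60) even though the limit is 3 per minute, which is the edge problem described above.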

Sliding window log algorithm

The fixed window counter algorithm has a major issue: it allows more requests to go through at the edges of a window. The sliding window log algorithm fixes the issue. It works as follows:

- The algorithm keeps track of request timestamps. Timestamp data is usually kept in a cache, such as a Redis sorted set.

- When a new request comes in, remove all the outdated timestamps. Outdated timestamps are defined as those older than the start of the current time window.

- Add the timestamp of the new request to the log.

- If the log size is less than or equal to the allowed count, the request is accepted. Otherwise, it is rejected.

In this example, the rate limiter allows 2 requests per minute. In practice, the log usually stores Unix timestamps, but this example uses human-readable times for clarity.

- The log is empty when a new request arrives at 1:00:01. Thus, the request is allowed.

- A new request arrives at 1:00:30, and the timestamp 1:00:30 is inserted into the log. After the insertion, the log size is 2, which does not exceed the allowed count. Thus, the request is allowed.

- A new request arrives at 1:00:50, and its timestamp is inserted into the log. After the insertion, the log size is 3, which exceeds the allowed size of 2. Therefore, this request is rejected, although its timestamp remains in the log.

- A new request arrives at 1:01:40. Requests in the range [1:00:40, 1:01:40) are within the latest time frame, but requests sent before 1:00:40 are outdated.

- The two outdated timestamps, 1:00:01 and 1:00:30, are removed from the log. After the removal, the log size is 2; therefore, the request is accepted.

Note:

- Rate limiting implemented by this algorithm is very accurate. In any rolling window, requests will not exceed the rate limit.

- The algorithm consumes a lot of memory because even if a request is rejected, its timestamp might still be stored in memory.
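A minimal in-memory sketch of the sliding window log, replaying the example above with times expressed as seconds after 1:00:00. A `deque` stands in for the Redis sorted set and assumes timestamps arrive in non-decreasing order; `SlidingWindowLog` is a hypothetical name.

```python
from collections import deque


class SlidingWindowLog:
    """Sliding window log for one client (in-memory stand-in for a Redis sorted set)."""

    def __init__(self, limit, window):
        self.limit = limit    # maximum requests allowed in any rolling window
        self.window = window  # window length in seconds
        self.log = deque()    # request timestamps, oldest first

    def allow(self, now):
        # Remove timestamps older than the start of the current window (now - window).
        while self.log and self.log[0] < now - self.window:
            self.log.popleft()
        # Log the request even if it ends up rejected, as the algorithm describes.
        self.log.append(now)
        # Accept if the log size is less than or equal to the limit.
        return len(self.log) <= self.limit


limiter = SlidingWindowLog(limit=2, window=60)
# 1:00:01, 1:00:30, 1:00:50 and 1:01:40 as seconds after 1:00:00.
print([limiter.allow(t) for t in (1, 30, 50, 100)])  # [True, True, False, True]
print(list(limiter.log))  # [50, 100]
```

Because rejected requests are still appended, the log holds every request in the current window, accepted or not, which is the memory cost noted above.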

Sliding window counter algorithm

The Sliding Window Counter algorithm is a hybrid approach that combines elements of both the Fixed Window and Sliding Window Log algorithms. It aims to provide a more balanced and efficient rate-limiting mechanism by using counters that are updated based on the sliding window concept.

A sliding window counter is similar to a fixed window counter, but it smooths out bursts of traffic by adding a weighted count of the previous window to the count in the current window.

For example,

- Suppose the limit is 4 requests per minute, as in the diagram above.

- There are 4 requests in the window [1:00:00, 1:01:00) and 2 requests in the window [1:01:00, 1:02:00).

- The 2 requests in the current window arrive at 1:01:15, which is 25% of the way into the window [1:01:00, 1:02:00). The weighted request count is 4 × (1 − 25%) + 2 = 5 > 4, so the request that pushes the count over the limit is rejected.

- Even though neither window exceeds the limit on its own, the request is rejected because the weighted sum of the previous and current windows does.

- In other words, the rolling window [1:00:15, 1:01:15] is estimated to contain 5 requests, more than the limit of 4, so we have to reject this request.
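A sketch of the sliding window counter for a single client, with times in seconds after 1:00:00. As a design choice, this sketch accepts a request only while the weighted estimate of requests already in the rolling window is below the limit; the class name and this exact acceptance rule are assumptions rather than a canonical definition. The demo sends 4 requests in [1:00:00, 1:01:00) and then 2 requests at 1:01:15.

```python
class SlidingWindowCounter:
    """Sliding window counter for one client; times are seconds supplied by the caller."""

    def __init__(self, limit, window):
        self.limit = limit          # maximum requests per rolling window
        self.window = window        # window length in seconds
        self.current_window = None  # index of the current fixed window
        self.current_count = 0      # requests accepted in the current window
        self.previous_count = 0     # requests accepted in the previous window

    def allow(self, now):
        window_id = int(now // self.window)
        if window_id != self.current_window:
            # Roll over: the old window counts as "previous" only if it is adjacent.
            adjacent = self.current_window is not None and window_id == self.current_window + 1
            self.previous_count = self.current_count if adjacent else 0
            self.current_window = window_id
            self.current_count = 0
        # Fraction of the current window that has elapsed (0.25 at 1:01:15).
        elapsed = (now % self.window) / self.window
        # Weight the previous window by how much of it still overlaps the rolling window.
        estimate = self.previous_count * (1 - elapsed) + self.current_count
        if estimate < self.limit:
            self.current_count += 1
            return True
        return False


limiter = SlidingWindowCounter(limit=4, window=60)
print([limiter.allow(t) for t in (10, 20, 30, 40)])  # [True, True, True, True]
# At 1:01:15 the estimates are 4 * 0.75 + 0 = 3 and then 4 * 0.75 + 1 = 4.
print([limiter.allow(75) for _ in range(2)])  # [True, False]
```

Only two counters are stored per client, instead of one timestamp per request.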

Pros:

- It is more efficient than Sliding Window Log as it avoids storing individual timestamps.

- Provides a good approximation of the sliding window behaviour.

- Reduces memory usage compared to storing logs.

Cons:

- Less precise than the Sliding Window Log, because it assumes that requests in the previous window were evenly distributed across it.

- Requires careful tuning of sub-window size.

Rate limiting in distributed systems

In a simple system, all requests pass through a single rate limiter.

In a distributed environment with multiple data centres, a load balancer spreads requests across many servers and data centres. Each data centre typically runs its own instance of the rate limiter middleware, so one client's requests may be checked by several different instances.

To enforce one consistent limit, these instances must share their state: the counters or timestamps live in one shared cache (for example, Redis) that every instance reads and updates. Those updates need to be atomic; otherwise two instances can read the same count at the same moment and both accept a request that should have been rejected.
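To illustrate the shared-state idea, here is a Python sketch in which an in-process dictionary guarded by a lock stands in for the shared cache, and two limiter instances (think of them as two data centres) use it with fixed windows. `SharedCounterStore` and `RateLimiterNode` are hypothetical names; with a real store such as Redis, the store itself would perform the atomic increment (Redis `INCR` is atomic).

```python
import threading


class SharedCounterStore:
    """In-process stand-in for a shared cache holding every rate limiter's counters."""

    def __init__(self):
        self._counts = {}
        self._lock = threading.Lock()

    def incr(self, key):
        # Increment-and-read as one atomic step, as a real shared store must guarantee.
        with self._lock:
            self._counts[key] = self._counts.get(key, 0) + 1
            return self._counts[key]


class RateLimiterNode:
    """One rate limiter instance (e.g. one per data centre) backed by the shared store."""

    def __init__(self, store, limit, window):
        self.store = store
        self.limit = limit    # maximum requests per client per window
        self.window = window  # window length in seconds

    def allow(self, client_id, now):
        key = f"{client_id}:{int(now // self.window)}"  # one counter per client per window
        return self.store.incr(key) <= self.limit


store = SharedCounterStore()
node_a = RateLimiterNode(store, limit=3, window=60)
node_b = RateLimiterNode(store, limit=3, window=60)
# The same client's requests land on different nodes but update one shared counter.
print([node.allow("alice", now=5) for node in (node_a, node_b, node_a, node_b)])
# [True, True, True, False]
```

Had each node kept its own store, each would have accepted 3 requests, letting 6 through in total. This sketch never deletes old window keys; a real deployment would let them expire (for example with Redis `EXPIRE`).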

Conclusion

Rate limiting is a vital technique for managing traffic and ensuring the stability, performance, and security of web services. By understanding the various methods and best practices for rate limiting, you can effectively control the flow of requests to your server and provide a better experience for all users.

Incorporating rate limiting into your system is not just about preventing abuse; it’s about creating a balanced and fair environment that can handle the demands of modern web traffic. Whether you are a developer, system administrator, or security professional, mastering the concept of rate limiting is essential for the robust management of your online services.