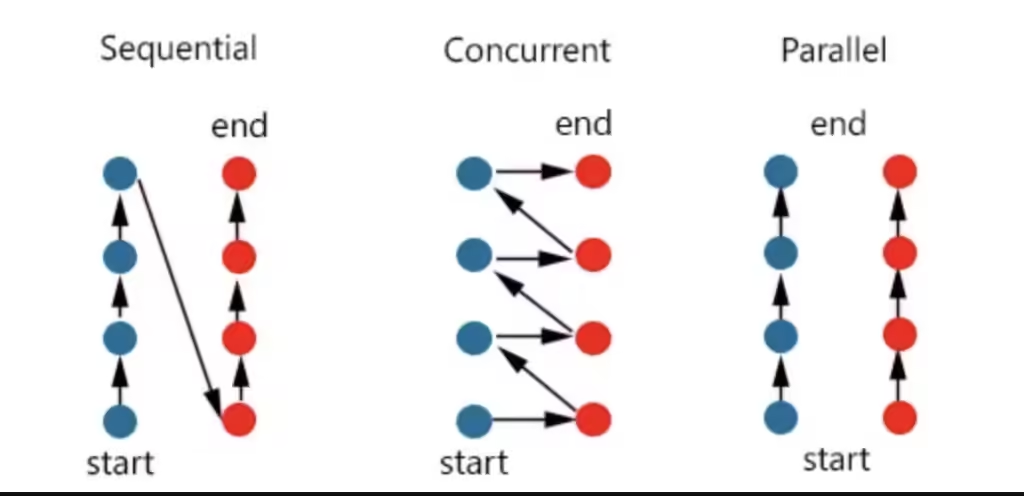

In modern computing, two critical approaches for improving performance and efficiency are concurrent and parallel computing. While these terms are often used interchangeably, they refer to distinct concepts in the field of computer science. This article explores these concepts, their differences, and their practical applications.


Introduction to Concurrent and Parallel Computing

Both concurrent and parallel computing deal with multiple tasks whose execution overlaps in time. The distinction lies in how those tasks are scheduled and executed.

- Concurrent computing focuses on dealing with multiple tasks at the same time, but not necessarily executing them simultaneously. On a single core, it gives the illusion of multiple tasks running in parallel by interleaving their operations.

- Parallel computing, on the other hand, involves actually executing multiple tasks at the same instant, typically on different processors or cores. It is often described as a special case of concurrency in which the overlapping tasks really do run simultaneously.

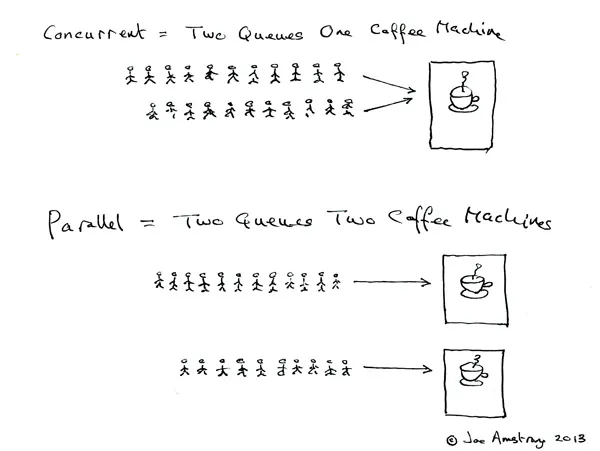

Analogies

Example 1

Concurrency: Two queues sharing one coffee machine

Parallelism: Two queues, each with its own coffee machine

Example 2

Concurrency: When I am not at home, my wife takes care of our child and the household tasks, switching between them.

Parallelism: When I am at home, I take care of our child while she handles the household tasks at the same time.

Concurrent Computing

- Concurrency is a programming model in which multiple tasks make progress during overlapping periods of time.

- This model doesn’t require these tasks to be executed in parallel; rather, tasks are interleaved by switching between them.

- It’s primarily useful when the system has to handle tasks such as I/O operations (disk access, network requests) that spend a lot of time in the waiting state.

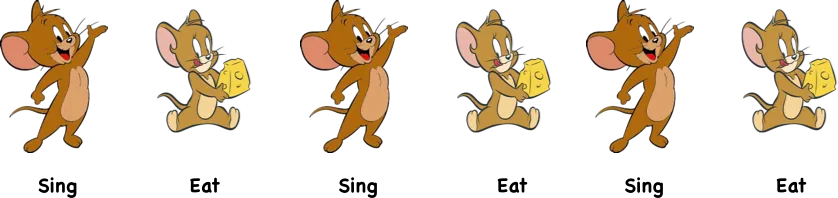

- Suppose you are asked to sing and eat at the same time. At any given instant you can either sing or eat, because both need your mouth. So you eat for a while, then sing, and repeat until the food is finished or the song is over. You have performed the two tasks concurrently.

- On a single-core processor, concurrency is achieved through context switching: the operating system saves the state of the running task and resumes another, switching many times per second.
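The eat-and-sing interleaving can be sketched as a tiny cooperative scheduler in Python. This is a simplification under stated assumptions: a real operating system performs preemptive context switching (a timer interrupt forces the switch and the kernel saves and restores CPU state), whereas here each generator voluntarily yields after one step. The names `task` and `round_robin` are hypothetical.

```python
from collections import deque


def task(name, steps):
    """A hypothetical task that hands control back after each step."""
    for i in range(1, steps + 1):
        yield f"{name} {i}"


def round_robin(*tasks):
    """Run generator-based tasks on one thread, switching after every step."""
    ready = deque(tasks)
    log = []
    while ready:
        current = ready.popleft()
        try:
            log.append(next(current))  # run the task until its next yield
        except StopIteration:
            continue  # the task is finished, so it is not re-queued
        ready.append(current)  # "switch": the task goes to the back of the queue
    return log


print(round_robin(task("eat", 2), task("sing", 3)))
# prints ['eat 1', 'sing 1', 'eat 2', 'sing 2', 'sing 3']
```

Only one step ever runs at a time, yet both tasks advance over the same period, which is concurrency without parallelism.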

Key Characteristics of Concurrency:

- Task Interleaving: In concurrent systems, tasks take turns sharing CPU resources. Even though only one task might be using the CPU at a time, multiple tasks progress together by switching between them.

- Asynchronous Operations: Concurrent programs often rely on asynchronous execution, so that a task waiting for I/O or for another task to finish does not block the others.

- Single Processor or Multi-Processor: Concurrency can occur on a single processor, by context switching between tasks, or on multiple processors, where tasks may also run simultaneously.

Benefits of Concurrent Computing:

- Responsive Systems: Concurrency allows systems to remain responsive, especially in user interfaces (UIs), web servers, and other applications where tasks are waiting for resources or input.

- Better Resource Utilization: While some tasks wait for input/output, the CPU can be kept busy with other work instead of sitting idle.
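To make the I/O-bound case concrete, here is a minimal sketch using Python's `asyncio`, assuming `asyncio.sleep` is an acceptable stand-in for a real network or disk wait. The coroutine `fake_request` is hypothetical. All three coroutines run on a single thread; the event loop switches to another one whenever the current one is waiting.

```python
import asyncio
import time


async def fake_request(name, delay):
    # asyncio.sleep stands in for waiting on a network or disk (hypothetical I/O).
    # While this coroutine waits, the event loop runs the other ones.
    await asyncio.sleep(delay)
    return name


async def main():
    start = time.perf_counter()
    results = await asyncio.gather(
        fake_request("a", 0.2),
        fake_request("b", 0.2),
        fake_request("c", 0.2),
    )
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")
```

On a typical machine the elapsed time is close to 0.2 s rather than 0.6 s, because the three waits overlap instead of running back to back.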

Examples of Concurrent Systems:

- Operating Systems: Most modern operating systems (Windows, Linux) are concurrent: they let many processes make progress by rapidly switching between them, and on multi-core machines they also run some of those processes in parallel.

- Event-Driven Web Servers: Servers built on event-driven runtimes such as Node.js use asynchronous I/O to handle many requests concurrently on a small number of threads, which makes them highly scalable.

- User Interfaces: Applications with a graphical user interface (GUI) run the UI and background processes concurrently, ensuring the UI remains responsive.
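The event-driven server model can be sketched in a few lines. Node.js itself is JavaScript; this sketch instead uses Python's `asyncio`, which follows the same single-threaded event-loop idea. The uppercase-echo protocol and the helper names `handle_client`, `send`, and `demo` are hypothetical.

```python
import asyncio


async def handle_client(reader, writer):
    # One coroutine per connection. Each await on I/O hands control back to the
    # event loop, so a single thread can serve many clients concurrently.
    line = await reader.readline()
    writer.write(line.upper())
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def send(port, message):
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(message.encode() + b"\n")
    await writer.drain()
    reply = await reader.readline()
    writer.close()
    await writer.wait_closed()
    return reply.decode().strip()


async def demo(n_clients=5):
    # Port 0 asks the OS for any free port.
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        return await asyncio.gather(*(send(port, f"req {i}") for i in range(n_clients)))


print(asyncio.run(demo()))
# prints ['REQ 0', 'REQ 1', 'REQ 2', 'REQ 3', 'REQ 4']
```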

Parallel Computing

- Parallelism refers to a programming model where multiple tasks are executed at the same time on different cores or processors.

- Parallel computing is typically achieved by dividing a larger problem into smaller sub-tasks that can be computed independently and simultaneously.

- Suppose you are given two tasks: cooking and talking to a friend on the phone. You can do both at the same time, because cooking uses your hands while the conversation uses your voice. You are now doing the two tasks in parallel.
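Dividing a problem into independent sub-tasks can be sketched with Python's `concurrent.futures`. The sketch uses processes rather than threads because, in the standard CPython build, the global interpreter lock lets only one thread execute Python bytecode at a time, while separate processes can run on separate cores. The `__main__` guard is needed because some platforms start worker processes by re-importing the module. The functions `sum_of_squares` and `parallel_sum_of_squares` are hypothetical examples.

```python
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(bounds):
    """Sub-task: sum i*i for i in [lo, hi). Independent of every other range."""
    lo, hi = bounds
    return sum(i * i for i in range(lo, hi))


def parallel_sum_of_squares(n, n_workers=4):
    """Split [0, n) into at most n_workers ranges and sum them in separate processes."""
    if n <= 0:
        return 0
    step = -(-n // n_workers)  # ceiling division, so the ranges cover all of [0, n)
    ranges = [(lo, min(lo + step, n)) for lo in range(0, n, step)]
    with ProcessPoolExecutor(max_workers=n_workers) as pool:
        # Each range runs in a worker process; the partial sums are combined here.
        return sum(pool.map(sum_of_squares, ranges))


if __name__ == "__main__":
    print(parallel_sum_of_squares(1_000_000))
```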

Key Characteristics of Parallel Computing:

- Simultaneous Execution: Unlike concurrency, where tasks are interleaved, parallel computing ensures that multiple tasks are running simultaneously on multiple processors.

- Multi-Core Processing: Requires systems with multiple cores (or processors) to handle tasks in parallel, improving the computation speed significantly for large, divisible problems.

- Data Parallelism vs. Task Parallelism:

  - Data Parallelism focuses on distributing data across different parallel computing nodes to perform the same operation on each subset.

  - Task Parallelism distributes different tasks (or functions) across parallel computing nodes to execute different computations simultaneously.
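In Python's `concurrent.futures`, data parallelism is the `pool.map(f, chunks)` pattern: one function applied to many slices of data. Task parallelism instead hands different functions to different workers. A minimal sketch of the latter, where `count_primes` and `fibonacci` are hypothetical stand-ins for two unrelated computations:

```python
import math
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit):
    """Count primes below limit by trial division (deliberately CPU-bound)."""
    return sum(
        1
        for n in range(2, limit)
        if all(n % d for d in range(2, math.isqrt(n) + 1))
    )


def fibonacci(n):
    """Return the n-th Fibonacci number iteratively."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def run_tasks(prime_limit, fib_n):
    # Task parallelism: two different functions are submitted at once,
    # and each can run in its own worker process on its own core.
    with ProcessPoolExecutor(max_workers=2) as pool:
        primes = pool.submit(count_primes, prime_limit)
        fib = pool.submit(fibonacci, fib_n)
        return primes.result(), fib.result()


if __name__ == "__main__":
    print(run_tasks(100, 10))  # prints (25, 55)
```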

Benefits of Parallel Computing:

- Improved Performance: Parallel computing is particularly useful for tasks that are computation-heavy, such as scientific simulations, graphics processing, and machine learning models.

- Efficient Resource Utilization: Utilizing multiple CPU cores simultaneously allows for faster problem-solving and better use of available hardware.

- Scalability: As multi-core processors become more common, parallel programs can run faster as more cores are added, up to the limit set by the part of the work that must run sequentially.
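How far adding cores helps is commonly estimated with Amdahl's law. If a fraction $p$ of a program's running time can be parallelized and $N$ cores are available, the ideal speedup $S(N)$ is bounded as follows. This is an idealized model that ignores communication and load-balancing costs, so real speedups are usually lower.

```latex
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}}, \qquad
\lim_{N \to \infty} S(N) = \frac{1}{1 - p}

% Worked example with p = 0.9 and N = 8:
S(8) = \frac{1}{0.1 + 0.9/8} = \frac{1}{0.2125} \approx 4.71
```

With $p = 0.9$, even unlimited cores give at most a 10x speedup, because the sequential 10% still has to run on one core.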

Examples of Parallel Systems:

- Scientific Simulations: Parallel computing is extensively used in fields like physics, chemistry, and biology for simulations that require significant computational power.

- Graphics Processing: Modern GPUs (Graphics Processing Units) are designed for parallel computation, handling thousands of parallel threads to render images quickly.

- Machine Learning: Large-scale machine learning models, especially deep learning, require parallel processing to train models on vast datasets across multiple cores or machines.

Differences Between Concurrent and Parallel Computing

Though both concurrency and parallelism involve dealing with multiple tasks, their approaches and use cases differ significantly.

| Aspect | Concurrent Computing | Parallel Computing |
|---|---|---|
| Execution | Tasks are interleaved, not necessarily simultaneous | Tasks are executed simultaneously |
| Hardware Dependency | Can work on a single core through task switching | Requires multiple cores or processors |
| Task Handling | Focuses on handling multiple tasks or requests | Focuses on dividing tasks for simultaneous execution |
| Primary Use Cases | I/O-bound tasks, event-driven applications | CPU-bound tasks, scientific computing |
| Examples | Web servers, operating systems | Graphics rendering, machine learning |
| Performance Focus | Responsiveness and task management | Speed and efficiency in processing |

Challenges in Concurrent and Parallel Computing

While both models bring significant benefits, they also introduce challenges in implementation.

Challenges in Concurrency:

- Race Conditions: Concurrency can lead to race conditions, where the result depends on the unpredictable timing of tasks that access shared resources, for example two tasks updating the same variable at the same time.

- Deadlock: When tasks wait indefinitely for resources locked by each other, causing a halt in the system.

- Synchronization: Managing the correct sequence of operations between tasks is critical to avoid issues like deadlock and race conditions.
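Both problems and their usual fixes can be illustrated with Python's `threading` module. In this sketch, the `Counter`, `Account`, `transfer`, `run_threads`, and `demo` names are hypothetical. `increment_unsafe` is shown only for contrast: whether it actually loses updates depends on thread timing, so it is not run here. The race is fixed with a lock around the read-modify-write; the deadlock is avoided by always acquiring multiple locks in one fixed global order.

```python
import threading


class Counter:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self):
        # Read-modify-write with no lock: two threads can read the same old
        # value, and one of the two updates is lost (a race condition).
        self.value = self.value + 1

    def increment(self):
        # The lock turns the read-modify-write into a critical section.
        with self._lock:
            self.value += 1


class Account:
    def __init__(self, account_id, balance):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src, dst, amount):
    if src is dst:
        return  # a non-reentrant Lock cannot be acquired twice by one thread
    # Deadlock avoidance by lock ordering: always lock the lower id first.
    # If thread 1 locked A and waited for B while thread 2 locked B and
    # waited for A, both would wait forever.
    first, second = sorted((src, dst), key=lambda acc: acc.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def run_threads(target, args_list):
    threads = [threading.Thread(target=target, args=args) for args in args_list]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def demo():
    counter = Counter()

    def add_many():
        for _ in range(10_000):
            counter.increment()

    run_threads(add_many, [()] * 4)

    a, b = Account(1, 100), Account(2, 100)

    def shuttle(src, dst):
        for _ in range(1_000):
            transfer(src, dst, 1)

    # Opposite-direction transfers at the same time: the classic deadlock setup.
    run_threads(shuttle, [(a, b), (b, a)])
    return counter.value, a.balance, b.balance


print(demo())  # prints (40000, 100, 100)
```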

Challenges in Parallelism:

- Load Balancing: Dividing work evenly among processors can be difficult; when the workload is distributed unevenly, some processors sit idle while others are still busy.

- Communication Overhead: In distributed systems, the time spent on communication between processors can diminish the performance benefits of parallel execution.

- Data Dependencies: Ensuring that tasks executed in parallel do not depend on the results of other tasks can be challenging, requiring careful programming to handle dependencies.
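A running total (prefix sum) shows both issues at small scale: every output depends on all earlier inputs, yet most of the work can still be parallelized. One common approach, sketched here under the assumption that the input is a plain Python list, splits the data into near-equal chunks (simple static load balancing), computes running sums within each chunk in parallel, and then resolves the cross-chunk dependency in a short sequential pass. The names `split_evenly`, `local_prefix_sums`, and `parallel_prefix_sum` are hypothetical.

```python
from concurrent.futures import ProcessPoolExecutor
from itertools import accumulate


def split_evenly(items, n_parts):
    """Static load balancing: contiguous chunks whose sizes differ by at most one."""
    q, r = divmod(len(items), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        end = start + q + (1 if i < r else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def local_prefix_sums(chunk):
    return list(accumulate(chunk))


def parallel_prefix_sum(items, n_workers=4):
    chunks = split_evenly(items, n_workers)
    # Phase 1 (parallel): running sums within each chunk are independent.
    with ProcessPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(local_prefix_sums, chunks))
    # Phase 2 (sequential): chunk i depends on the totals of chunks 0..i-1,
    # so that dependency is resolved only after every chunk has finished.
    result, offset = [], 0
    for part in partials:
        result.extend(x + offset for x in part)
        if part:
            offset += part[-1]
    return result


if __name__ == "__main__":
    print(parallel_prefix_sum(list(range(1, 11))))
    # prints [1, 3, 6, 10, 15, 21, 28, 36, 45, 55]
```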

When to Use Concurrent vs. Parallel Computing

The decision to use concurrency or parallelism depends on the nature of the problem being solved:

- Concurrency is best suited for I/O-bound tasks, where tasks spend a significant amount of time waiting for external resources (e.g., user input or network data). Web servers, file management systems, and GUIs benefit greatly from concurrency.

- Parallelism excels in CPU-bound tasks where computation time is the bottleneck. Scientific simulations, machine learning model training, video rendering, and matrix computations are ideal candidates for parallel computing.

In practice, many systems are both concurrent and parallel. For example, a large web server may handle many client requests concurrently while spreading their processing across multiple cores, so that several requests are processed in parallel.
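A minimal sketch of that combination in Python: `asyncio` provides the concurrency (many requests in flight on one event-loop thread), and `loop.run_in_executor` with a `ProcessPoolExecutor` provides the parallelism (CPU-bound steps run in separate processes). The request format, the 0.05 s simulated I/O delay, and the names `cpu_work`, `handle_request`, and `serve` are hypothetical.

```python
import asyncio
from concurrent.futures import ProcessPoolExecutor


def cpu_work(n):
    """Hypothetical CPU-bound part of a request: sum of squares below n."""
    return sum(i * i for i in range(n))


async def handle_request(request_id, n, pool):
    await asyncio.sleep(0.05)  # simulated network I/O, awaited concurrently
    loop = asyncio.get_running_loop()
    # Offload the CPU-bound step to a worker process so it can run in parallel
    # with other requests' CPU work while the event loop stays responsive.
    result = await loop.run_in_executor(pool, cpu_work, n)
    return request_id, result


async def serve(requests):
    with ProcessPoolExecutor() as pool:
        return await asyncio.gather(
            *(handle_request(rid, n, pool) for rid, n in requests)
        )


if __name__ == "__main__":
    print(asyncio.run(serve([(1, 10), (2, 100)])))  # prints [(1, 285), (2, 328350)]
```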

How Concurrency and Parallelism Manifest

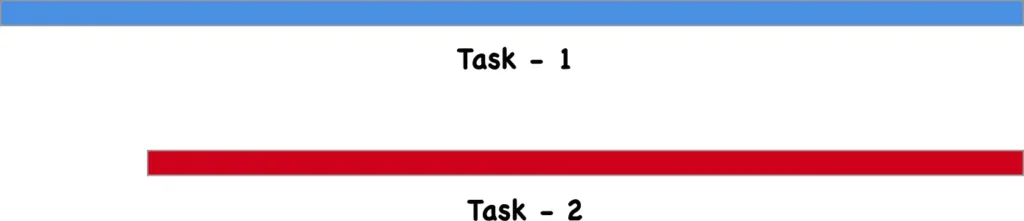

Case 1: Concurrent and Parallel

- Multiple tasks are executed in parallel across multiple cores, and their execution may still involve interleaving.

- Consider a multi-threaded program in a system with multiple cores. Each thread is performing a different task. Since the system has several cores, each task can be assigned to a separate core and executed at the same time.

Case 2: Concurrent but Not Parallel

- A single-core processor running multiple threads. These threads are interleaved through time-slicing, where the CPU switches between threads rapidly, creating the illusion of simultaneous execution, even though only one task is actually running at any given moment.

- In this scenario, there is concurrency because tasks are switching back and forth, but no parallelism because the processor is only handling one task at a time.

Case 3: Parallel but Not Concurrent

- Tasks are running in parallel, meaning they are executed at the same time on multiple cores or processors, but there is no interleaving or switching between them. Each task runs independently on a separate core.

- There is parallelism because tasks are being executed simultaneously on different processors, but there is no concurrency since the tasks do not alternate or share resources.

Case 4: Neither Concurrent nor Parallel

- There is neither concurrency nor parallelism: the system processes only one task at a time, sequentially, with no switching and no simultaneous execution.

- For example, a single-core system that runs each job to completion before starting the next one.

Conclusion

Concurrent and parallel computing are vital techniques in modern computing, each suited to specific types of tasks. Concurrency allows for responsive systems by interleaving tasks, whereas parallel computing focuses on performing multiple tasks simultaneously to maximize performance on multi-core systems.

Understanding the distinctions between these two models is essential for designing efficient software systems, especially as hardware advances continue to increase the availability of multi-core processors. By leveraging concurrency and parallelism effectively, developers can create applications that are both responsive and capable of handling complex, large-scale computations efficiently.
